Some Instagram users exploited the social network to exchange child pornography images. Quickly alerted by its users, the network responded by restricting certain hashtags.

Child pornography is a scourge that plagues the internet. Exchanging such images is illegal and punishable by law, but that does not stop those involved from finding new ways to communicate with each other in public spaces. To do so, they rely on coded language, which is sometimes exposed.

This is what happened on Instagram, Facebook's photo-sharing social network. Although Instagram is not designed for private communication or file sharing, some users managed to hijack it with seemingly innocuous hashtags. The main ones, #dropboxlinks and #tradedropbox, were attached to ordinary-looking photos. They allowed pedophiles to contact each other and then privately exchange illegal content through file-sharing platforms such as Dropbox.

These hashtags were sometimes accompanied by far more explicit variants such as #gay13years, or by comments such as "I have links" or "I exchange boys for girls", TNW reports, citing screenshots.

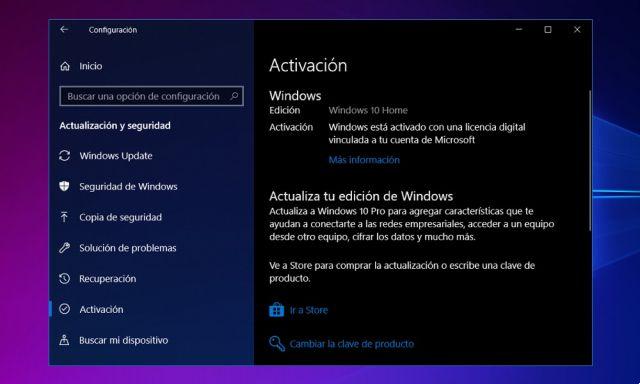

Instagram has tackled the problem in its own way by making the hashtags in question inaccessible. A message now reads "Posts for #dropboxlinks have been restricted because the community flagged content that may violate the Instagram Community Guidelines." Instagram's rules state that the social network must be used in compliance with the law, and that "zero tolerance is applied to the sharing of sexual content involving minors".

Instagram also said it was "developing technology that proactively finds content related to child pornography and child exploitation as soon as it is uploaded so it can respond quickly."

Despite these hashtag restrictions, several people who reported the accounts involved received a response from Instagram stating that those users were not violating the platform's terms of service, since the content was not hosted on Instagram itself. As a result, the accounts were not deleted, according to The Atlantic.

The community to the rescue

Contacted by TNW, Dropbox condemned this use of its service and promised to work with Instagram to ensure that such content is removed from its hosting service as quickly as possible.

As for the misuse of the social network itself, it was the Instagram community that tried to drown out the practice by flooding the hashtags in question with memes, making it harder for pedophiles to find each other.

The episode illustrates how difficult it is for platforms to curb this kind of behavior, since such users keep finding ways around restrictions and return with new methods.